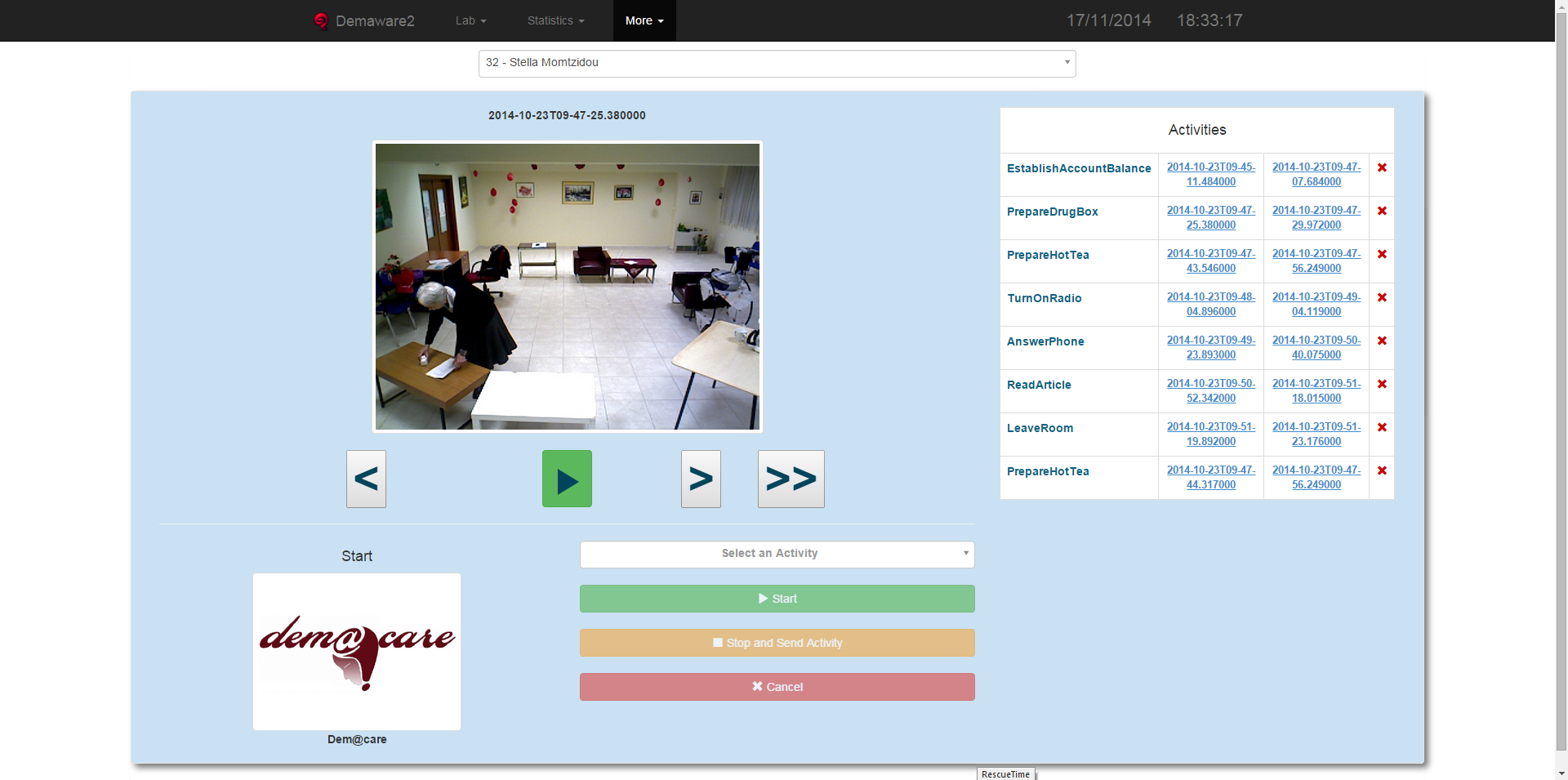

Video Annotation Tool

The DemaWare2 Video Annotation tool enables researchers to manually annotate image datasets with activity data for benchmarking. The tool provides a web-based, player-like environment for stepping through the images recorded for each participant in lab trials. While watching, the user can select activities from a list and mark their start and end points.

When RGB-D cameras are used, only the RGB stream is used. The tool serves two purposes: a) to allow pilots to annotate their data so that it can serve as ground truth for various algorithms, and b) to enable developers as well as pilots to go through the recordings and review the experiment afterwards. The final output of activities and timestamps is exported in a universal format (XML) usable beyond the Dem@Care methods and scope.
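Because the export is plain XML, it can be read with any standard parser. The following is a minimal sketch, assuming a hypothetical layout with one `<activity>` element per annotation; the element and attribute names are illustrative assumptions, not the tool's documented schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical export; element and attribute names are assumptions,
# not the tool's documented schema.
SAMPLE_XML = """
<annotations participant="P01">
  <activity name="PrepareTea" start="12.0" end="47.5"/>
  <activity name="WaterPlant" start="60.0" end="75.0"/>
</annotations>
"""

def load_annotations(xml_text):
    """Return a list of (activity name, start seconds, end seconds)."""
    root = ET.fromstring(xml_text.strip())
    return [
        (a.get("name"), float(a.get("start")), float(a.get("end")))
        for a in root.iter("activity")
    ]

annotations = load_annotations(SAMPLE_XML)
# Duration of each annotated activity, e.g. for ground-truth statistics.
durations = {name: end - start for name, start, end in annotations}
```

A real export would need the parser adapted to whatever element names the tool actually writes.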

Download more information and instructions

Contact: Thanos Stavropoulos – athstavr@iti.gr

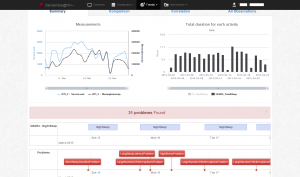

inSAIN

inSAIN (Integrated Sensors, Analytics and Interpretation) is a platform developed and owned by MKLab that homogeneously integrates devices and intelligent analytics such as visual sensing, knowledge modelling and interpretation, along with tailored visualizations. The platform, originally emerging from the Dem@Care FP7 system, addresses the needs of modern environments such as Ambient Assisted Living, Ambient Intelligence and the Internet of Things. inSAIN capitalizes on domain-independent device integration, interoperability, reuse, and homogeneous visualization and interpretation that can adapt to any domain, such as Smart Homes, eHealth and mHealth scenarios, agriculture and retail.

Contact: Thanos Stavropoulos – athstavr@iti.gr

ARWC Software Module

ARWC (Activity Recognition from Wearable Camera)

The ARWC component analyses frames from egocentric video camera recordings in order to detect the instrumental activities of daily living of the wearer. ARWC builds on ORWC and RRWC, which perform object recognition and room (location) recognition respectively. The module's inputs are confidences for objects from a predefined set of categories and for locations from a predefined set of categories. It applies a temporal feature computation algorithm and a supervised machine learning approach (SVM) to recognize instrumental activities from a given set of categories.
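The temporal feature step can be pictured with a small sketch. The actual ARWC feature computation is not specified here, so this example substitutes a simple stand-in: averaging the per-frame object and location confidences over fixed windows. The function name and the windowing scheme are assumptions, and the SVM classification step is left out.

```python
def temporal_features(object_conf, location_conf, window):
    """Average per-frame confidences over non-overlapping windows.

    object_conf, location_conf: lists of per-frame confidence vectors.
    Returns one feature vector per window: mean object confidences
    followed by mean location confidences.
    """
    features = []
    n_frames = min(len(object_conf), len(location_conf))
    for start in range(0, n_frames - window + 1, window):
        # Concatenate object and location confidences frame by frame.
        frames = [object_conf[i] + location_conf[i]
                  for i in range(start, start + window)]
        features.append([sum(col) / window for col in zip(*frames)])
    return features

# Two object categories and two locations over four frames (made-up values).
objects = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.0, 1.0]]
locations = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
feats = temporal_features(objects, locations, window=2)
```

Each resulting vector could then be passed to a supervised classifier such as an SVM, one vector per time window.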

ORWC (Object Recognition from Wearable Camera)

The ORWC component uses a machine learning approach with prediction of salient areas in video frames. It has to be trained with a certain amount of annotated egocentric video frames to generate a visual dictionary and a multiclass object classifier. It ensures recognition of objects from a predefined set of categories. The output of the ORWC component is a vector of probabilities, each coordinate of which corresponds to an object category.

RRWC (Room Recognition from Wearable Camera)

The RRWC software module recognizes the location in a person’s environment where an activity is performed. It has to be trained with a certain amount of annotated egocentric video frames in order to generate a visual vocabulary and a multiclass classifier for a given set of locations in the person’s environment. These models are then used in RRWC to process new videos and compute the probabilities of the rooms detected. Its output is a vector of probabilities, each coordinate of which corresponds to a “room”, that is, a predefined location in the person’s environment.
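Both ORWC and RRWC output a probability vector with one coordinate per category. As a small illustration of how such a vector might be consumed, the sketch below picks the most likely category; the room names and the function are hypothetical and not part of either module.

```python
ROOMS = ["kitchen", "living_room", "bathroom"]  # hypothetical category list

def most_likely(probabilities, categories):
    """Return (category, probability) for the highest-scoring coordinate."""
    if len(probabilities) != len(categories):
        raise ValueError("one probability per category is required")
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return categories[index], probabilities[index]

label, score = most_likely([0.1, 0.7, 0.2], ROOMS)
```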

Contact: Jenny Benois-Pineau – jenny.benois@labri.fr

CAR (Complex Activity Recognition) module

CAR (Complex Activity Recognition), written in C++, is designed for analyzing video content. It can recognize events such as a person ‘falling’ or ‘walking’. CAR divides the video processing workflow into several separate modules, from acquisition and segmentation up to activity recognition. Each module has a specific interface, and different plugins (corresponding to algorithms) can be implemented for the same module. New analysis systems can be built easily from this set of plugins. The order in which the plugins are used and their parameters can be changed at run time, and the result visualized in a dedicated GUI. The platform has further advantages, such as easy serialization to save and replay a scene, portability to Mac, Windows or Linux, and easy deployment to quickly set up an experiment anywhere. CAR takes different kinds of input: an RGB camera or depth sensor for online processing, or image/video files for offline processing.

This generic architecture is designed to facilitate:

- Integration of new algorithms into CAR;

- Sharing of the algorithms among the Stars team. Currently, 15 plugins are available, covering the whole processing chain. Some plugins use the OpenCV library.
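The module-and-plugin workflow described above can be illustrated with a short sketch. It only conveys the idea in Python: CAR itself is written in C++, and every function name and data field below is hypothetical rather than part of CAR's interface.

```python
from typing import Callable, Dict, List

# A plugin maps a shared "scene" dictionary to an updated one.
Plugin = Callable[[Dict], Dict]

def acquisition(scene: Dict) -> Dict:
    # Stand-in for reading a frame from a camera or file.
    return {**scene, "frame": [0, 0, 9, 9, 0]}

def segmentation(scene: Dict) -> Dict:
    # Toy foreground mask: pixels brighter than a threshold.
    threshold = scene.get("threshold", 5)
    return {**scene, "mask": [p > threshold for p in scene["frame"]]}

def event_detection(scene: Dict) -> Dict:
    # Toy event: report "object_present" if any foreground pixel exists.
    events = ["object_present"] if any(scene["mask"]) else []
    return {**scene, "events": events}

def run_pipeline(plugins: List[Plugin], scene: Dict) -> Dict:
    """Apply plugins in order; the list can be rebuilt at run time."""
    for plugin in plugins:
        scene = plugin(scene)
    return scene

result = run_pipeline([acquisition, segmentation, event_detection],
                      {"threshold": 5})
```

Because each stage shares one interface, swapping in a different segmentation plugin or changing a parameter such as `threshold` only changes the list or the initial dictionary, not the pipeline runner.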

The plugins cover the following research topics:

- algorithms: 2D/3D mobile object detection, camera calibration, reference image updating, 2D/3D mobile object classification, sensor fusion, 3D mobile object classification into physical objects (individual, group of individuals, crowd), posture detection, frame-to-frame tracking, long-term tracking of individuals, groups of people or crowds, global tracking, basic event detection (for example entering a zone, falling…), human behaviour recognition (for example vandalism, fighting…) and event fusion; 2D and 3D visualisation of simulated temporal scenes and of real scene interpretation results; evaluation of object detection, tracking and event recognition; image acquisition (RGB and RGBD cameras) and storage; video processing supervision; data mining and knowledge discovery; image/video indexation and retrieval;

- languages: scenario description, empty 3D scene model description, video processing and understanding operator description;

- knowledge bases: scenario models and empty 3D scene models;

- learning techniques for event detection and human behaviour recognition.

Contact: François Brémond – francois.bremond@inria.fr, Matias Marin – matias.marin@inria.fr, Carlos Fernando Crispim-Junior – carlos-fernando.crispim_junior@inria.fr

See also: https://team.inria.fr/stars/software/car-complex-activity-recognition-component-installation/

DTI-2 Software Module

The DTI-2 software module analyzes data recorded by the DTI-2 skin conductance wristband and extracts a number of physiological parameters. To do so, the module contains a set of algorithms which determine the amount of movement experienced by the wearer, their active energy expenditure, and their levels of stress (arousal) throughout the day.

Contact: Marten Pijl (Philips Research)

Dem@Care System

This package is the entire distribution of the Dem@Care system. It contains the core implementation of the system and offers all its advanced capabilities, such as the mediation between services and components (including sensors), the interfaces with sensors and information provider components, the databases and their connectors, and the ESB (Enterprise Service Bus) for distribution and orchestration.

Contact: Marc Contat – marc.contat@airbus.com

DEM@CARE Security Module

This module encompasses the different security measures implemented in the project: authentication using OAuth, secured REST API and WebSockets, ActiveMQ with SSL/TLS, an SSH server, and Apache security. It is not a single piece of software or a component, but rather a set of modules and implementation methods.

Contact: Marc Contat – marc.contat@airbus.com

Periodicity Visualisations

This software can be used to provide an insight into the regular routines and habits that are evident under periodicity analysis of longitudinal lifelog data (such as sleep records, actigraphy, etc). These routines may be daily, weekly, monthly, seasonal, annual, etc. The periodicity analysis examines the data for periodic signals which signify a rhythm in a routine. Analysis of the intensity of this signal indicates the regularity of the routine.
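One common way to quantify the intensity of a periodic signal is to measure how much of the signal's energy sits at the frequency of interest. The sketch below does this with a single discrete Fourier coefficient; it is an illustrative assumption, not necessarily the method used by this software, and the function name is hypothetical.

```python
import cmath
import math

def periodicity_intensity(samples, period):
    """Share of the mean-removed signal energy at a period given in samples.

    Close to 1 for a pure sinusoid of that period when the record spans
    whole periods, and close to 0 when no rhythm of that period is present.
    """
    n = len(samples)
    mean = sum(samples) / n
    centred = [x - mean for x in samples]
    energy = sum(x * x for x in centred)
    if energy == 0:
        return 0.0
    coeff = sum(x * cmath.exp(-2j * math.pi * t / period)
                for t, x in enumerate(centred))
    # A real sinusoid splits its energy between +f and -f, hence the factor 2.
    return 2 * abs(coeff) ** 2 / (n * energy)

# Hourly samples over 14 days with a purely daily rhythm (synthetic data).
hourly = [math.sin(2 * math.pi * t / 24) for t in range(24 * 14)]
daily = periodicity_intensity(hourly, period=24)
weekly = periodicity_intensity(hourly, period=24 * 7)
```

On this synthetic record the daily intensity is essentially 1 and the weekly intensity essentially 0; on real lifelog data, a drop in the daily value over time would suggest a routine becoming less regular.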

Related Publications: Hu, Feiyan and Smeaton, Alan F. and Newman, Eamonn and Buman, Matthew P. (2015) Using periodicity intensity to detect long term behaviour change. In: UbiComp ’15, 7-11 Sept 2015, Osaka, Japan. ISBN 978-1-4503-3575-1/15/09

http://doras.dcu.ie/20782/

Contact: Feiyan Hu – feiyan.hu4@mail.dcu.ie, Eamonn Newman – eamonn.newman@dcu.ie, Alan F. Smeaton – alan.smeaton@dcu.ie

Picture Diary

Picture Diary supports joint reminiscing using pictures from daily life as memory cues. It allows the user to upload pictures taken automatically by an auto-camera, for example the Narrative Clip or the Revue camera, or pictures taken manually and stored for important life events. The pictures are shown organised into activities, and a Person with Dementia (PwD) can then reminisce and talk about the past days.

Contact: Basel Kikhia – basel@memorizon.com, Johan E. Bengtsson – johan@memorizon.com, Josef Hallberg – josef@memorizon.com, Stefan Sävenstedt – stefan@memorizon.com

See also: www.memorizon.com

iData

iData is a synthetic knowledge generation tool for Activities of Daily Living. Users can define various parameters customizing the generated dataset and making it as realistic as possible for benchmarking. Such parameters include: dependencies between observations (location, posture, actions and objects) and complex activities, temporal parameters such as the minimum and maximum duration of each event, routines (i.e. series of activities), repetitions and noise. The exported knowledge is in RDF-ready format, enabling interoperability and exploitation within and outside the framework of Dem@Care.
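The parameters listed above can be pictured with a small generator. This is a minimal sketch under stated assumptions: the activity names, durations, observation lists and the noise model (dropping one observation) are all invented for illustration, and the output is a plain list of dictionaries rather than iData's RDF-ready format.

```python
import random

# Hypothetical activity models: (min_duration_s, max_duration_s, observations).
ACTIVITY_MODELS = {
    "PrepareTea": (60, 180, ["kitchen", "standing", "kettle"]),
    "WatchTV": (600, 3600, ["living_room", "sitting", "remote"]),
}

def generate_routine(routine, repetitions, noise_prob, seed=0):
    """Generate timestamped events for a routine repeated several times.

    With probability `noise_prob`, one observation is dropped from an event
    to imitate sensor noise.
    """
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    events, clock = [], 0
    for _ in range(repetitions):
        for name in routine:
            lo, hi, observations = ACTIVITY_MODELS[name]
            duration = rng.randint(lo, hi)
            observed = list(observations)
            if rng.random() < noise_prob:
                observed.pop(rng.randrange(len(observed)))
            events.append({"activity": name, "start": clock,
                           "end": clock + duration, "observations": observed})
            clock += duration
    return events

dataset = generate_routine(["PrepareTea", "WatchTV"],
                           repetitions=3, noise_prob=0.2)
```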

Download here

Contact: Georgios Meditskos – gmeditsk@iti.gr

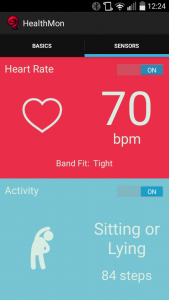

HealthMon

HealthMon is a mobile health monitoring platform capitalizing on instant feedback. Essentially, HealthMon is a significantly more compact by-product of Dem@Care, facilitating its exploitation as a commercial product. End-users are equipped with wearables (currently Microsoft Band), directly linked to the HealthMon mobile application. Sensor metrics, such as steps, heart rate and posture, are fused together and interpreted according to user profiling, possibly producing alerts (e.g. high or low heart rate given a posture or a fall). Real-time detection and alerts are streamed over the Web, immediately notifying the end-user himself, his friends, family and medical doctors.
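The interpretation step, where fused metrics are checked against a user profile, might look roughly like the sketch below. The posture names, heart-rate limits and field names are made-up illustrative values, not HealthMon's actual rules or data model.

```python
# Hypothetical per-posture heart-rate limits (bpm) from a user profile.
PROFILE = {"lying": (40, 90), "sitting": (45, 110), "walking": (60, 150)}

def interpret(sample, profile=PROFILE):
    """Return a list of alert strings for one fused sensor sample."""
    alerts = []
    if sample.get("fall_detected"):
        alerts.append("fall")
    limits = profile.get(sample["posture"])
    if limits is not None:
        low, high = limits
        if sample["heart_rate"] < low:
            alerts.append("low heart rate while " + sample["posture"])
        elif sample["heart_rate"] > high:
            alerts.append("high heart rate while " + sample["posture"])
    return alerts

alerts = interpret({"posture": "lying", "heart_rate": 120, "steps": 0})
```

The same heart rate of 120 bpm raises an alert while lying but not while walking, which is the point of interpreting a metric in the context of posture.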

Download here

Contact: Thanos Stavropoulos – athstavr@iti.gr

DemaWare (Dem@Care MiddleWare)

DemaWare (Dem@Care MiddleWare) is a self-contained system used in the framework of Dem@Care for rapid prototyping. This compact, simplified edition of the system is easy to install and configure at different locations and includes all the Dem@Care processing components for audio and video analysis and end-user interfaces for clinicians, carers, patients and administrators. Its purpose is to enable straightforward integration and tests of more sensors (third-party lifestyle and wearable sensors, such as Plugwise, wirelesstag.net, Jawbone UP24, Withings Aura) and functionality, getting instant clinical feedback from pilots on long-term adoption.

Contact: Thanos Stavropoulos – athstavr@iti.gr

HAR (Human Activity Recognition)

The HAR component analyzes frames from static camera video recordings in order to detect the activities that occur within them in space and time. A small set of annotated frames (training set) is used to build a visual vocabulary and a multiclass model, to discriminate the most probable human activities. The detection algorithm then segments new data (testing set) in space and time to recognize the activities in it.

Contact: Konstantinos Avgerinakis – koafgeri@iti.gr, Alexia Briassouli – abria@iti.gr